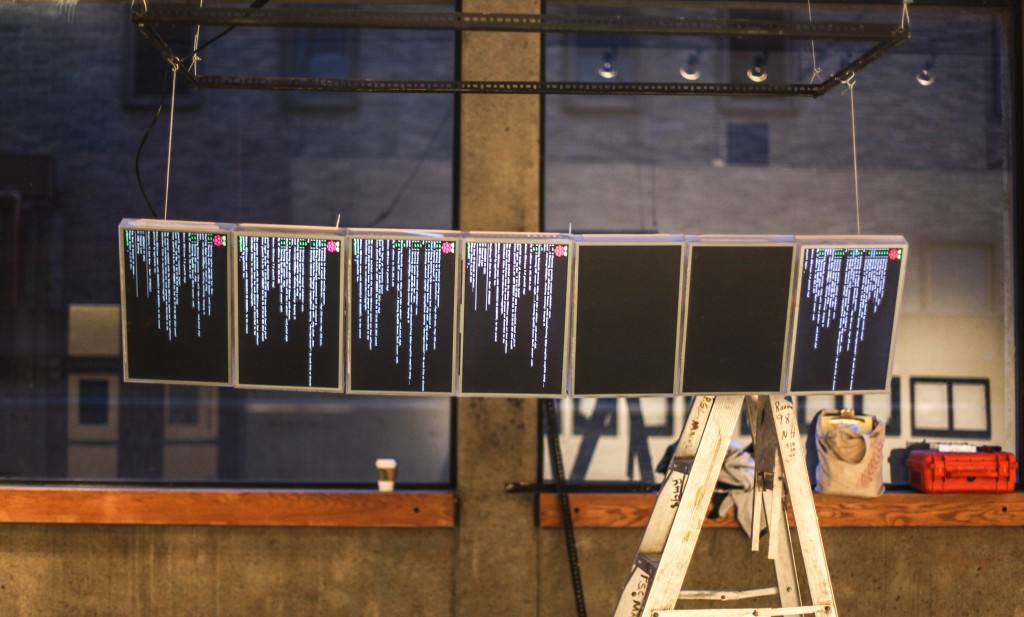

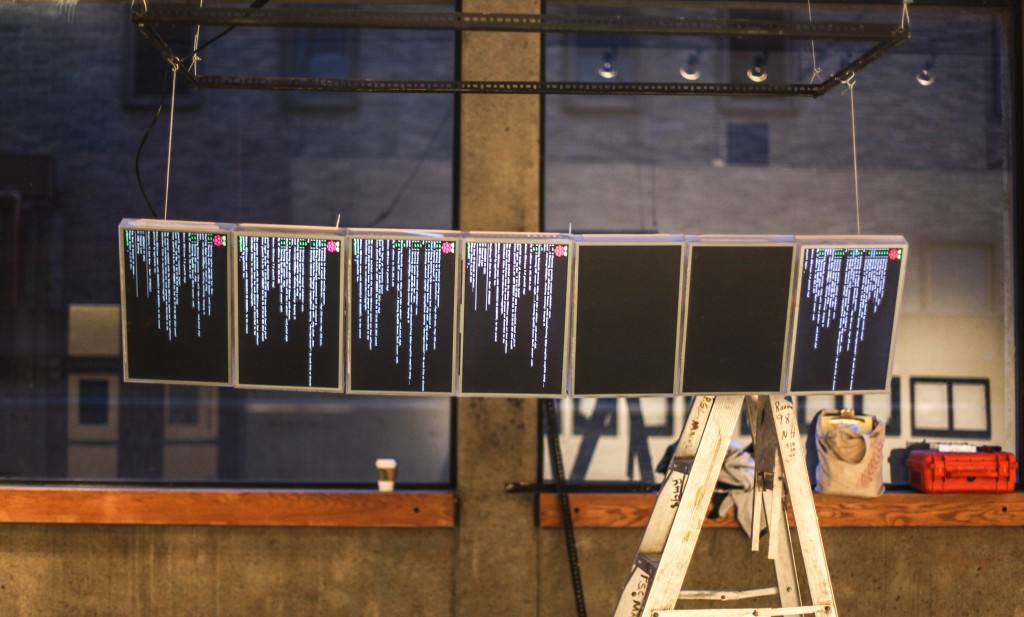

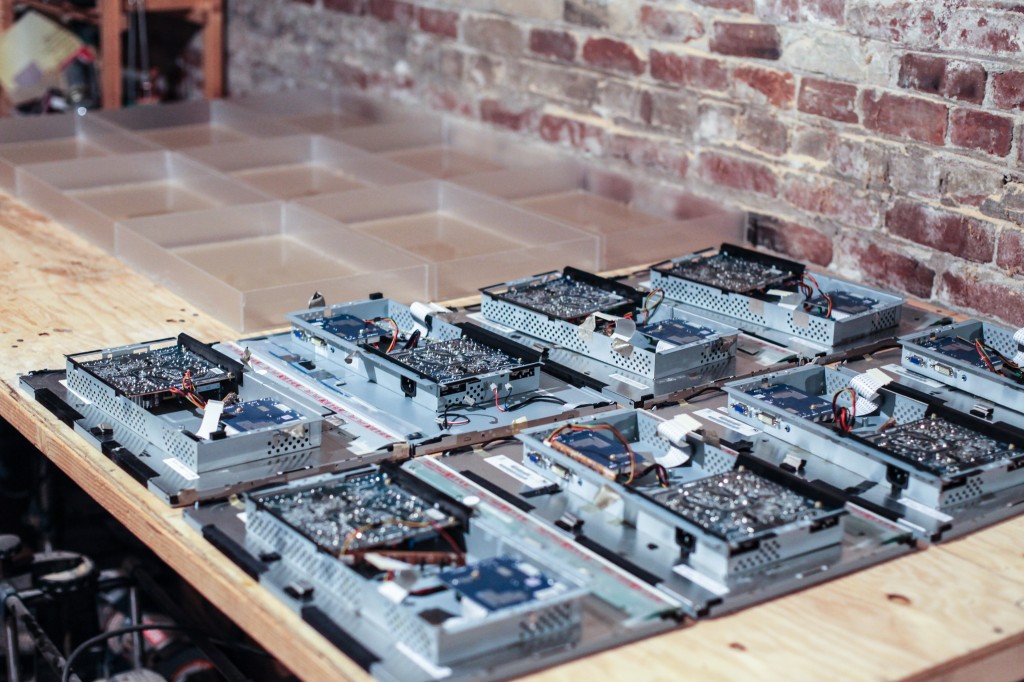

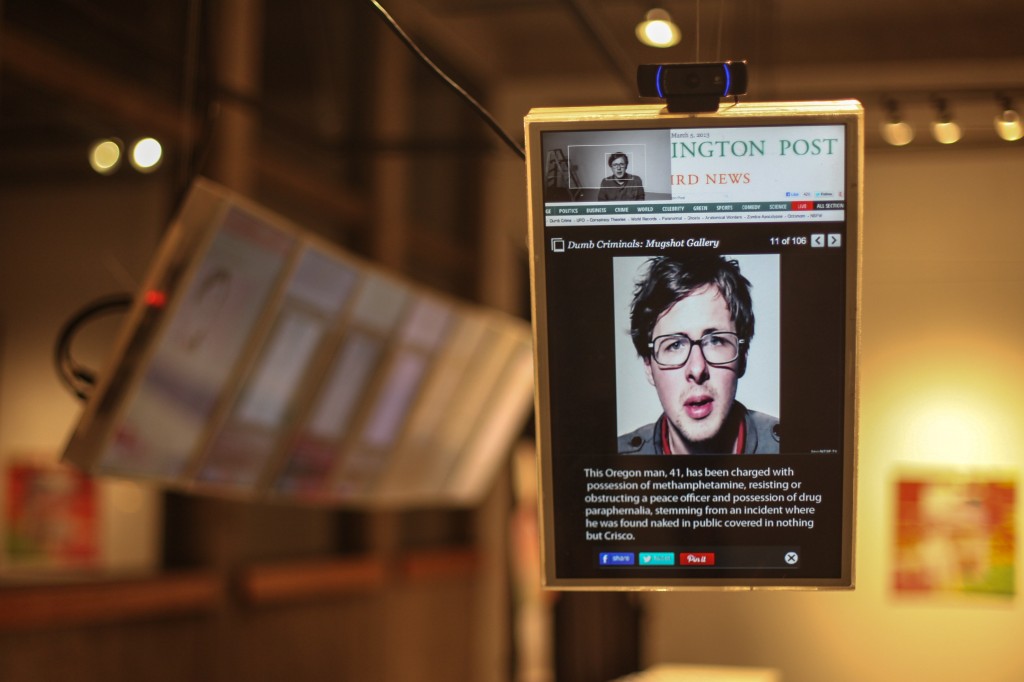

Here’s the wall, installed for the first time. You can see the Raspbian OS booting up.

Stars Without Makeup by Leif Shackelford and Erika M. Anderson, Autzen Gallery @ PSU

I took a little break from guitar pedal design and record making to think about visuals for the next EMA tour.

I want to integrate video into our show, but in a slightly different way than the traditional projector or LED wall. To get warmed up for a big task like this, I decided to participate in my studio’s (“Holladay Studios”) group show at PSU. I’m also always interested in interactive sculpture, and of course working with somewhat broken industrial junk. I thought I’d document this process with a few technical insights for problems that somebody else might run into, and a few shout-outs to folks whose resources I have used.

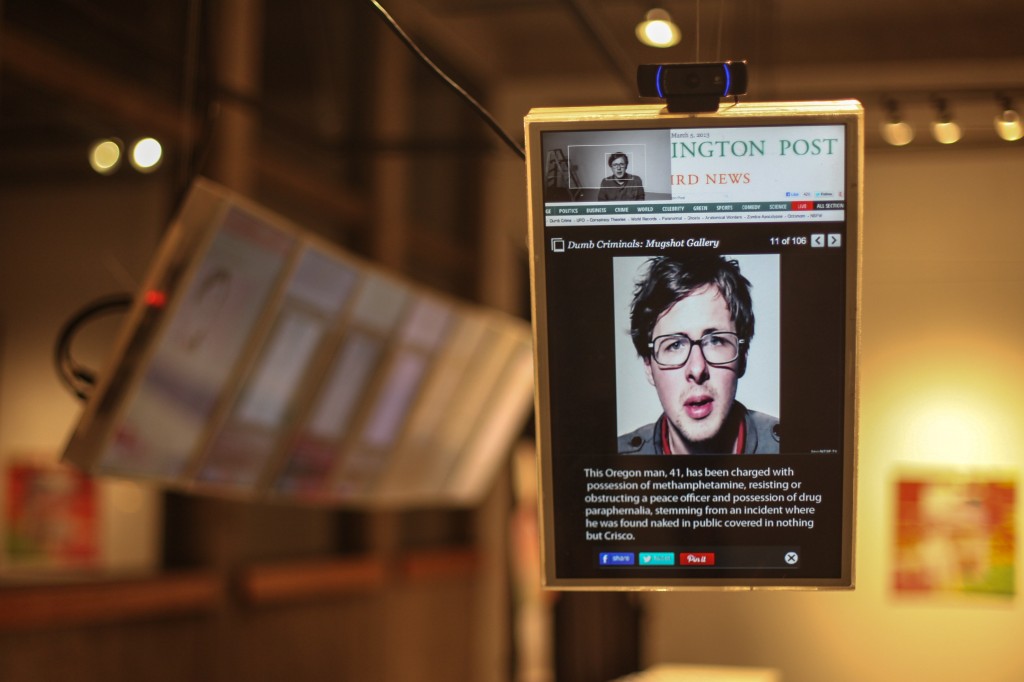

This piece uses an array of small computers (“Raspberry piMacs”, perhaps) that can function separately or together over a network to create, process, and display images. For Stars Without Makeup, a screen captures images of unwitting participants in the gallery and places their images onto tabloids or other “rag” type news articles. The goal is to encourage empathy towards the lack of consent that occurs daily in our sensational media culture. The following is a brief technical description of my process in creating this piece, and some ideas for additional uses of this technology.

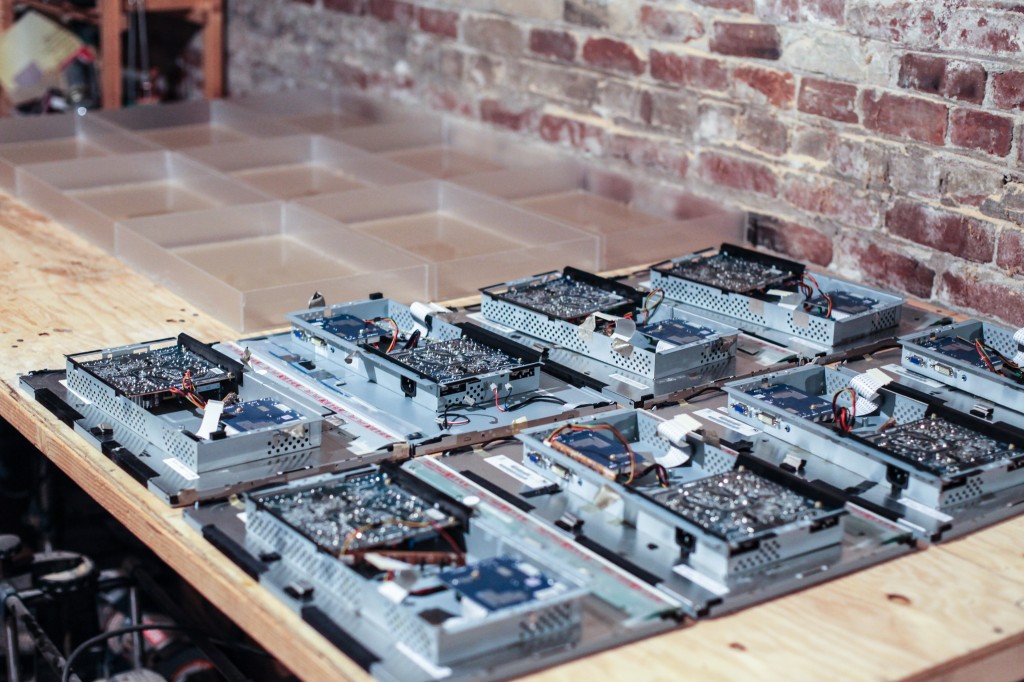

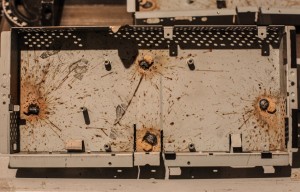

These screens, which I bought in “broken” condition, needed new power capacitors. Now they’re fixed and ready to be re-housed.

The Screens:

There seems to be a glut of broken and otherwise unusable LCD screens being dumped by offices and corporations. I found a guy who has a small makeshift retail office at a facility that otherwise strips down electronics for their metals, plastics etc. A lot of the stuff they get is totally broken, some of it is in perfect working condition, but others yet are what I would call “sort of broken”. Doing a Google search for “LCD TV capacitor” will show you that a lot of LCD displays that use fluorescent backlights fail for the same reason: under-spec or simply bad capacitors. I was able to get 10 of these 19″ widescreens at $10 each, but would ultimately like to use 24″ LED-lit screens. It just might be hard to find those on the “recycled” market for the time being.

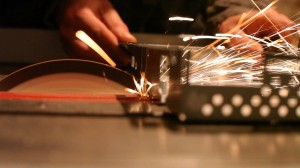

Cut these to size on the table saw. They turned out okay, would have been smoother if I had a more finely toothed blade around.

Fitting the screens into the plexi cases. Acrylic cement is pretty rad.

Making room for the HDMI cable to fit into the case.

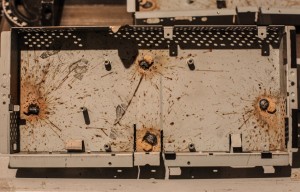

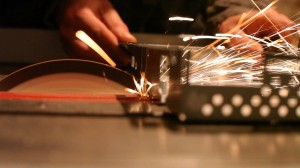

My sloppy welding job, looks pretty cool though.

Welding threads onto the LCD Chassis. My first time with a wire welder, you can see how sloppy I am in the finished photo ;)

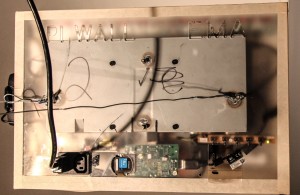

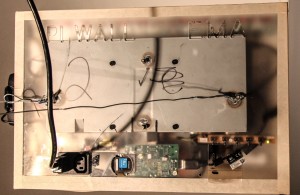

Here you can see the completed case, with the Raspberry, its power supply, etc.

My rehousing goals for these screens were:

+ make room for a raspberry and its power supply in the case.

+ add daisy chain-able IEC power ports, for quick setup on tour

+ add plexiglass to front of display for kicking and or beer spilling.

+ add 1/4″ threading for mounting / hanging.

I used 1/8″ acrylic sheets, and modified the screen’s power supply cage. This involved a lot of cutting, welding, milling, soldering etc. There are some photos of me succeeding / failing below.

CPU:

So I got a bunch of dead screens, and repaired them. I need a way to put images on these screens, preferably the ability to put the same image or tiled image on each one. Since a mega computer with >10 video outs seems expensive, complicated, and fail prone, I decided to look at embedded solutions. I’ve done a lot of work with Arduino, and the STM32 variant “Maple”, but doing video needs a little more power. Raspberry pi has a pretty decent community surrounding it right now and it seemed a decent choice since it offers a high resolution digital monitor port and a reasonable amount of speed / memory to work with.

Each $35 Raspberry has been additionally equipped with an 8GB flash card, which is enough for the OS, and presumably a few hours’ worth of H.264 video if need be. They need to communicate, so each one also gets a USB wifi adapter, and lastly a display cable. That adds up to about $65 per unit + the display, which in this case was $10.

Software:

The folks at Raspberry Pi offer suggestions for software; as with most Linux boxes, there are many flavors available. I chose what seemed like the best combo between features and power, which is their distro called “Raspbian”, a version of the common Linux distro “Debian” built for the Pi’s ARM processor.

Once booted into Raspbian, you can get most packages you need by using the typical auto-install resources. There were just a few that I had to compile from source (such as experimental plugins for GStreamer).

Open Frameworks: In order to do some serious media work I needed a bunch of libraries that allow for OpenGL window creation, image loading / processing, etc. Luckily there are some folks working hard to port Open Frameworks over to the little Raspberry, and are doing so with some success!

In particular I was pretty stoked to borrow some code from @jvcleave and @bakercp, who are more talented programmers than I and also seem interested in getting OF to do cool stuff on the Raspberry Pi.

Web Cam

Most web cams are slow, bad, and low resolution (640×480). Thanks in large part to Skype HD etc., there are now lots of high resolution webcams available, but most require the computer to do some heavy lifting, both in USB bandwidth and in encoding / decoding the stream. I found Logitech’s c920, which offers hardware-compressed H.264. It seems to be one of the few that does so right now; I’m sure it’ll be the norm in years to come.

Open Frameworks is cross platform, and as such makes use of different low level libraries depending on the OS. On the Mac, video is handled with QuickTime. On Linux, libgstreamer is home base for video.

Out of the box, the c920 will provide video to GStreamer via the V4L2 camera source (v4l2src). This is okay, and with a fast machine would be no problem. However, the V4L2 camera source currently does not support H.264 streams, so after searching a bit I found the plugin I needed: Kakaroto’s UVCH264 camera source, available as part of the GStreamer “bad plugins” on the 0.10 git branch.

I spent countless hours trying to get it compiled for Raspberry Pi (partly because I’m a Linux noob), and then hacking that pipe into openFrameworks’ GSTUtils class (actually there’s a setPipe method so that wasn’t too hard). I eventually succeeded and was able to get an H.264 source from my Logitech c920 into Open Frameworks, but the framerates were still too slow for decent realtime HD video (bummer!). It’s maybe a little too much to ask a Raspberry for, but I might keep trying later. At this point I decided to just go for still images, because I could get a raw 1920×1080 frame about once a second.

Haar-like features (Facial Recognition)

Open Frameworks comes with out-of-the-box support for the OpenCV library (http://opencv.org), which contains useful things like object and face tracking. For this installation, I want to keep photos only when someone is standing there, and I also need to crop to the face area, so actual face detection, not just blob tracking, was required. With a typical Haar cascade file, it takes a Raspberry almost 10 seconds to analyze a full HD frame, so I had to minimize the area a bit.

I did so first with a contour finder to get a general idea of where a person was standing, but this took about the same amount of CPU time as a full frame Haar pass, and led to some false positives. Eventually I just chose a small area where I expected a person to be standing, and got the process time down to about 3 seconds, which I decided I could live with.

After lighting the area properly in the gallery, it is pretty reliable, no false positives, and I get everyone who manages to read at least a little of the artist statement.
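The region-of-interest trick described above can be sketched roughly like this. It is written in Python with OpenCV's Python bindings rather than the Open Frameworks C++ the installation actually ran, so treat it as an illustration, not the code from the piece. The function names, the ROI rectangle, and the `scaleFactor` / `minNeighbors` values are all assumptions.

```python
def detect_faces_in_roi(frame, roi, detect):
    """Run `detect` on a sub-rectangle of `frame` only.

    frame  : image supporting NumPy-style slicing, frame[y0:y1, x0:x1]
    roi    : (x, y, width, height) of the search area, in frame pixels
    detect : callable that takes the cropped image and returns (x, y, w, h) boxes
    Returns the boxes translated back into full-frame coordinates.
    """
    rx, ry, rw, rh = roi
    crop = frame[ry:ry + rh, rx:rx + rw]
    return [(int(x) + rx, int(y) + ry, int(w), int(h))
            for (x, y, w, h) in detect(crop)]


def make_haar_detector(cascade_path):
    """Build a `detect` callable from a Haar cascade XML file (needs OpenCV)."""
    import cv2  # imported lazily so the ROI helper itself has no dependencies

    cascade = cv2.CascadeClassifier(cascade_path)

    def detect(image):
        gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
        # Illustrative parameter values, not tuned for the gallery setup.
        return cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)

    return detect
```

With a frame loaded through `cv2.imread`, something like `detect_faces_in_roi(frame, (600, 100, 700, 700), make_haar_detector("haarcascade_frontalface_default.xml"))` would search only that 700×700 patch. Because the returned boxes are in full-frame coordinates, the face crop can still be taken from the original 1920×1080 image.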

Raspberry Network

The Raspberries are on an 802.11n network. Each is given a static IP, which is useful not only for communication, but also as an ID tag for software processes that need to change depending on which part of an array the screen is. In this show it’s used to ensure duplicate images don’t show up, and when it comes to tiled video, this is how each screen will know which tile it is.

Since it’s a small amount of data (7 screens = 7 jpegs, every 30 seconds) I simply have the Pi with the camera export its capture directory using NFS. The other Raspberries mount that folder and just read the images as though they were local. I need to make the system more robust for stage (i.e. fstab entries with background reconnect, and some software exception catchers) but for the gallery it’s okay for now.
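As a rough sketch of the IP-as-ID idea, here is one way a screen could turn its static address into a screen number and, for tiled video, into the slice of the full image it should show. This is Python rather than the Open Frameworks code the piece used. The addressing scheme (consecutive addresses starting at `192.168.1.101`) and both function names are made up for illustration.

```python
import ipaddress


def screen_index(ip, first_ip="192.168.1.101"):
    """Zero-based screen number, counted from the first screen's address.

    Assumes the screens were given consecutive static addresses.
    """
    return int(ipaddress.IPv4Address(ip)) - int(ipaddress.IPv4Address(first_ip))


def tile_rect(index, columns, rows):
    """Normalized (x, y, w, h) of one tile inside the full image.

    Tiles are numbered row by row, starting at the top-left corner.
    """
    if not 0 <= index < columns * rows:
        raise ValueError("index is outside the tile grid")
    row, col = divmod(index, columns)
    w, h = 1.0 / columns, 1.0 / rows
    return (col * w, row * h, w, h)
```

For example, in a 2×2 wall the screen at `192.168.1.104` gets index 3 and `tile_rect(3, 2, 2)` gives the bottom-right quarter, `(0.5, 0.5, 0.5, 0.5)`. Those numbers could be used as texture coordinates or as a crop rectangle.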

Here you can see the full piece. The basic idea is that the photos from the camera get “rolled down” to the other screens via wifi.
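The “rolled down” behaviour over the shared folder could look something like the sketch below: each screen lists the mounted capture directory and picks the image whose age rank matches its own screen number, so no two screens show the same photo. This is a Python illustration under assumed names (`image_for_screen`, a `/mnt/captures` mount point), not the actual Open Frameworks code.

```python
import os


def image_for_screen(capture_dir, index):
    """Return the path of the `index`-th newest JPEG, or None if there are too few.

    index 0 is the newest capture, index 1 the one before it, and so on.
    """
    paths = [os.path.join(capture_dir, name)
             for name in os.listdir(capture_dir)
             if name.lower().endswith((".jpg", ".jpeg"))]
    # Newest first, judged by modification time.
    paths.sort(key=os.path.getmtime, reverse=True)
    return paths[index] if 0 <= index < len(paths) else None
```

A screen with index 2 would call `image_for_screen("/mnt/captures", 2)` every so often. When the camera Pi writes a new capture, every image shifts one screen further down the wall. On a flaky network, `os.listdir` can raise `OSError` if the NFS mount drops, which is the kind of exception the stage version would need to catch.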

Next Up

This install does not use video playback, but the rock shows will, as well as some OpenGL visualizations. To keep things in sync and pass around data I’ll be using OSC, which is wonderful, and provides a common communication method between Ableton, the Raspberries, and possibly several iDevice controllers.

+= do some research about how to create OpenGL scenes in which each Pi is responsible only for rendering its own view, so I can leverage the power of distributed computing.
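To give a feel for how little is involved in OSC, here is a small sketch that builds an OSC 1.0 message by hand and sends it over UDP using only the Python standard library. The address `/sync/frame`, the port, and the function names are hypothetical. A real show would more likely use an existing OSC library (Open Frameworks has an OSC addon), so this is only meant to show the wire format.

```python
import socket
import struct


def _pad(data):
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    return data + b"\x00" * (4 - len(data) % 4)


def encode_osc(address, *args):
    """Encode an OSC 1.0 message with int32, float32 and string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)   # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)   # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError("unsupported OSC argument: %r" % (arg,))
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def send_osc(host, port, address, *args):
    """Send one OSC message to host:port over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc(address, *args), (host, port))
```

Something like `send_osc("192.168.1.102", 9000, "/sync/frame", 1200)` would tell one screen which frame it should be on. Looping over the screens' static IPs would do the same for the whole wall.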

+= keep removing metal from these things, so it might be affordable to fly with them (non U.S. tours).

+= see if raspberry can be powerful enough to reasonably parse realtime (high fps) kinect data and push it to the network.

+= think about tying some key variables into a DMX universe so that the whole thing might be interacted with by lighting folks.

+= and the fun part, get some interesting content on them!

a few useful links that helped me with this project:

Set up your Pi from OS X: http://pingbin.com/2012/07/format-raspberry-pi-sd-card-mac-osx/

List of config options for Pi: http://elinux.org/RPiconfig

Getting started with RasPi and OpenFrameworks: https://github.com/openFrameworks-RaspberryPi/openFrameworks/wiki/Raspberry-Pi-Getting-Started

The OF site (mostly for their documentation): http://www.openframeworks.cc

Omx Player (hw accelerated video) for OF: https://github.com/jvcleave/ofxOMXPlayer

Info about c920 and GStreamer: http://www.oz9aec.net/index.php/gstreamer/473-using-the-logitech-c920-webcam-with-gstreamer
